Beyond the IDE: A Production-Ready Pattern for Secure Agentic MCP Tool Integration

- Ken Wiltshire

- Jul 10, 2025

A deep dive into the MCP Tool Proxy Pattern for enterprise-grade AI systems.

While IDE-integrated MCP implementations like Cursor benefit from the IDE’s security sandbox, direct LLM-to-MCP server integrations in production environments face far greater security exposure.

Large Language Models (LLMs) are rapidly evolving from conversational chatbots into powerful reasoning engines. One of the most significant leaps in their capability is the use of tools — external functions and APIs that allow an LLM to interact with the world, query data, and take action. We’ve seen this paradigm flourish in IDEs, where tools can check code, run tests, or manage local files.

But what happens when we move beyond the developer’s desktop and into a production enterprise environment? The stakes change dramatically. Security, determinism, and efficiency become paramount. You can’t simply expose your internal APIs to an LLM and hope for the best. The risk of leaking sensitive data, such as API keys or customer information, is too high, and the cost of the LLM making a mistake on a critical parameter is too great.

Pattern, anyone? Let’s call it the MCP Tool Proxy Pattern and see if it sticks. It’s an architectural approach that bridges the gap between the non-deterministic, creative power of LLMs and the rigid, secure requirements of enterprise systems. It was born from a real-world, production-level challenge: making an LLM a first-class, secure client in a complex environment.

The Core Challenge: The LLM as a Privileged User

When an LLM uses a tool, it’s effectively acting as a user or, more accurately, a privileged programmatic client. The problem is that LLMs are, by their nature, non-deterministic. They don’t “know” that a client_id must be a specific value or that an api_key should be kept secret. They only see a function’s schema and make their best guess based on the prompt. Additionally, MCP server providers might (and should) require sensitive parameters to be passed securely, whether via a token, OAuth, or a similar mechanism, and the data must also travel over a secure transport. Much of this is difficult for a model to get right, and it would simply be bad practice to take deterministic data and move it into the non-deterministic world. That is a basic guiding principle when building agents.

Consider a simple tool for checking a customer’s order status:

    {
      "name": "get_order_status",
      "description": "Retrieves the status of a customer's order.",
      "parameters": {
        "type": "object",
        "properties": {
          "order_id": { "type": "string" },
          "customer_id": { "type": "string" },
          "api_key": { "type": "string" }
        },
        "required": ["order_id", "customer_id", "api_key"]
      }
    }

Exposing this schema directly to an LLM is problematic for several reasons:

Security Risk: The api_key is now part of the prompt context, making it visible to the model and potentially logged in various systems. This is a significant security vulnerability.

Wasted Tokens & Performance: The LLM has to reason about parameters like customer_id and api_key, which are often deterministic (i.e., they are fixed based on the current user's session). This wastes computational resources and increases latency.

Reliability & Hallucination: The LLM might “hallucinate” or guess an incorrect value for a deterministic parameter, leading to failed API calls and unpredictable behavior.

A Solution: MCP Tool Proxy Pattern

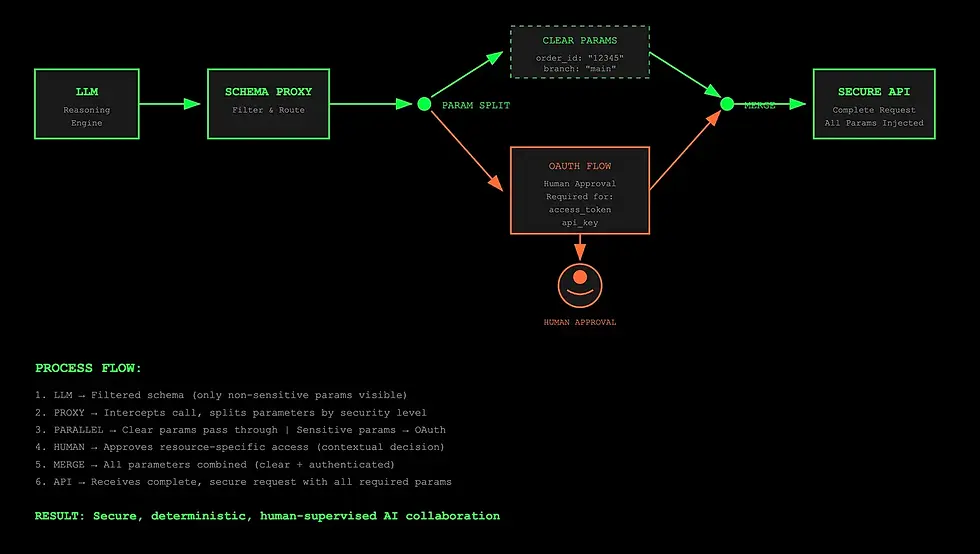

The MCP Tool Proxy Pattern introduces a trusted intermediary between the LLM and the tool server. This proxy has two primary responsibilities: filtering the schema presented to the LLM and injecting the necessary parameters before the tool is executed.

Let’s see how it transforms our get_order_status tool.

Step 1: Filtering the Schema

First, the proxy presents a simplified version of the tool’s schema to the LLM, removing all the deterministic or sensitive parameters. The LLM only sees what it needs to make a decision on.

Filtered Schema (What the LLM sees):

    {
      "name": "get_order_status",
      "description": "Retrieves the status of a customer's order.",
      "parameters": {
        "type": "object",
        "properties": {
          "order_id": { "type": "string" }
        },
        "required": ["order_id"]
      }
    }

Now, the LLM’s only job is to figure out the order_id from the user’s query. It doesn’t need to know about the customer_id or the api_key.
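The filtering step can be generalized so that each tool only declares which parameters to hide. What follows is a minimal sketch rather than part of any MCP SDK: `hideParameters` is a hypothetical helper name, and it assumes the schema shape used in this article (`parameters.properties` plus `parameters.required`). It builds a copy instead of mutating the server's schema, and it drops the hidden names from `required` as well as from `properties`.

```javascript
// Hypothetical helper (not an MCP SDK API): returns a copy of a tool schema
// with the named parameters removed from both `properties` and `required`.
function hideParameters(schema, hiddenNames) {
  const hidden = new Set(hiddenNames);
  const { properties = {}, required = [] } = schema.parameters;
  return {
    ...schema,
    parameters: {
      ...schema.parameters,
      properties: Object.fromEntries(
        Object.entries(properties).filter(([name]) => !hidden.has(name))
      ),
      required: required.filter((name) => !hidden.has(name)),
    },
  };
}

const originalSchema = {
  name: "get_order_status",
  description: "Retrieves the status of a customer's order.",
  parameters: {
    type: "object",
    properties: {
      order_id: { type: "string" },
      customer_id: { type: "string" },
      api_key: { type: "string" },
    },
    required: ["order_id", "customer_id", "api_key"],
  },
};

const llmSchema = hideParameters(originalSchema, ["customer_id", "api_key"]);
console.log(JSON.stringify(llmSchema.parameters.required)); // ["order_id"]
```

Because the original schema is left untouched, the proxy can still validate the fully assembled request against it before forwarding.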

Step 2: Injecting the Parameters

When the LLM decides to call the tool with the order_id, the proxy intercepts the request. It then uses its own secure context (e.g., the user's session data) to inject the missing parameters before forwarding the complete, valid request to the tool server.

Here’s how that might look in code:

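    // The proxy's logic for handling the 'get_order_status' tool
    const orderStatusProxy = {
      // 1. Filter the schema for the LLM
      filterSchema: (originalSchema) => {
        // Deep-copy first: a shallow spread would share the nested `parameters`
        // object, so the deletes below would also mutate the original schema
        const filteredSchema = structuredClone(originalSchema);
        const hidden = ["customer_id", "api_key"];
        // Hide sensitive and deterministic parameters
        for (const name of hidden) {
          delete filteredSchema.parameters.properties[name];
        }
        // Keep `required` in sync with the remaining properties
        filteredSchema.parameters.required =
          (filteredSchema.parameters.required ?? []).filter((name) => !hidden.includes(name));
        return filteredSchema;
      },

      // 2. Inject parameters before calling the tool
      injectParameters: async (llmParams, userContext) => {
        // Get the sensitive values from a secure source
        const apiKey = process.env.ORDER_API_KEY;
        const customerId = userContext.customerId;
        return {
          ...llmParams, // The 'order_id' from the LLM
          // Injected last, so these override anything the LLM tried to supply
          customer_id: customerId,
          api_key: apiKey,
        };
      },
    };

This simple but powerful pattern addresses all three of our initial challenges: the api_key never enters the prompt, the LLM reasons about one parameter instead of three, and the deterministic values can no longer be hallucinated.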
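One detail worth spelling out is what happens when the model supplies a hidden parameter anyway. The sketch below is illustrative only: `buildToolRequest`, `allowedParams`, and `injected` are assumed names, not part of the original design or of any SDK. It copies across only the parameters the filtered schema exposes and then merges the trusted values last, so a guessed `api_key` never reaches the tool server.

```javascript
// Illustrative sketch: build the final tool arguments from untrusted LLM
// arguments plus trusted, proxy-supplied values. All names are assumptions.
function buildToolRequest(llmArgs, allowedParams, injected) {
  const safeArgs = {};
  for (const name of allowedParams) {
    // Copy only parameters the filtered schema exposes; ignore everything else
    if (Object.hasOwn(llmArgs, name)) safeArgs[name] = llmArgs[name];
  }
  // Trusted values are merged last so they always win
  return { ...safeArgs, ...injected };
}

// The LLM supplies order_id and (incorrectly) tries to supply api_key too
const llmArgs = { order_id: "A-1001", api_key: "guessed-by-the-model" };
const request = buildToolRequest(llmArgs, ["order_id"], {
  customer_id: "cust-42", // would come from the user's session
  api_key: "key-from-vault", // would come from a secret store
});
console.log(JSON.stringify(request));
// {"order_id":"A-1001","customer_id":"cust-42","api_key":"key-from-vault"}
```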

Advanced Use Case: Tool-Specific Authentication and Human-in-the-Loop Workflows

The MCP authorization specification (March 2025 revision) standardizes on OAuth 2.1 flows, and the June 2025 revision classifies MCP servers as OAuth Resource Servers and requires clients to use RFC 8707 Resource Indicators. These developments validate the need for patterns like the MCP Tool Proxy: while the spec defines HOW to implement OAuth flows, our pattern addresses WHEN to trigger them and how to keep this complexity hidden from the LLM’s reasoning process.

The most powerful application emerges when handling tool-specific authentication flows within the MCP ecosystem, enabling true AI Native collaboration by seamlessly integrating human decision-making into LLM tool interactions.

Consider an MCP tool that needs to access a private git repository to analyze commit history:

    {
      "name": "analyze_git_commits",
      "description": "Analyzes recent commits in a git repository for code quality patterns.",
      "parameters": {
        "type": "object",
        "properties": {
          "repository_url": { "type": "string" },
          "branch": { "type": "string" },
          "since_date": { "type": "string" },
          "access_token": { "type": "string" }
        },
        "required": ["repository_url", "branch", "since_date", "access_token"]
      }
    }

The challenge here isn’t general application authentication: this specific repository requires explicit permission for this particular operation. The LLM shouldn’t need to understand repository-specific OAuth flows or token management.

The MCP Tool Proxy Pattern

The proxy intercepts the tool call and orchestrates the human-in-the-loop authentication flow:

    // Application-provided helpers assumed by this example (not part of MCP):
    // getRepoAccessToken, promptUserForRepoAccess, completeRepoOAuth, storeRepoAccessToken
    const gitAnalysisProxy = {
      filterSchema: (originalSchema) => {
        // Deep-copy so the delete below doesn't mutate the original schema
        const filteredSchema = structuredClone(originalSchema);
        // Hide repository-specific auth complexity from the LLM
        delete filteredSchema.parameters.properties.access_token;
        // Keep `required` in sync with the remaining properties
        filteredSchema.parameters.required =
          (filteredSchema.parameters.required ?? []).filter((name) => name !== "access_token");
        return filteredSchema;
      },

      injectParameters: async (llmParams, userContext) => {
        const { repository_url } = llmParams;
        // Check if we have permission for this specific repository
        let accessToken = await getRepoAccessToken(repository_url, userContext.userId);
        if (!accessToken) {
          // This is tool-specific auth - launch human-in-the-loop flow
          const authResult = await promptUserForRepoAccess({
            repository: repository_url,
            reason: "The AI needs to analyze commit patterns for code quality insights",
            permissions: ["read:commits", "read:repository"],
            userId: userContext.userId
          });
          if (authResult.approved) {
            // User approved - complete the OAuth flow for this specific repo
            accessToken = await completeRepoOAuth(repository_url, authResult.authCode);
            // Store token for future use (with appropriate TTL)
            await storeRepoAccessToken(repository_url, userContext.userId, accessToken);
          } else {
            throw new Error("Repository access denied by user");
          }
        }
        return {
          ...llmParams, // The repo URL, branch, and date from the LLM
          access_token: accessToken,
        };
      },
    };

AI Native Collaboration in Action

From the LLM’s perspective, the interaction remains clean and focused:

LLM sees and calls:

    {
      "name": "analyze_git_commits",
      "parameters": {
        "repository_url": "https://github.com/mycompany/private-repo",
        "branch": "main",
        "since_date": "2024-01-01"
      }
    }

What actually gets executed after human approval:

    {
      "name": "analyze_git_commits",
      "parameters": {
        "repository_url": "https://github.com/mycompany/private-repo",
        "branch": "main",
        "since_date": "2024-01-01",
        "access_token": "ghp_xxxxxxxxxxxx"
      }
    }

The human collaboration happens seamlessly:

LLM expresses intent to analyze the repository

Proxy detects auth requirement for this specific resource

Human receives contextual prompt with full context

Human makes informed decision based on the specific request

OAuth flow completes deterministically in the background

Tool call proceeds to the MCP server without interruption

This enables sophisticated AI Native collaboration: fine-grained permissions per tool and resource, contextual human decision-making with full visibility, audit trails of resource access, seamless revocation of tool-specific permissions, and cross-team collaboration where different humans can approve different resource access.
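The proxy code earlier calls `getRepoAccessToken` and `storeRepoAccessToken` without showing them. As a rough illustration of what could sit behind those calls, here is an in-memory sketch of per-user, per-repository grants with a TTL, an audit trail, and revocation. Everything in it (`createGrantStore`, the event names, the injectable clock) is an assumption made for the example; a real deployment would use an encrypted, persistent store.

```javascript
// In-memory sketch of per-user, per-repository grants with a TTL, an audit
// trail, and revocation. All names here are illustrative assumptions.
function createGrantStore({ ttlMs = 15 * 60 * 1000, now = () => Date.now() } = {}) {
  const grants = new Map();
  const auditLog = [];
  const keyFor = (repositoryUrl, userId) => JSON.stringify([userId, repositoryUrl]);
  const record = (event, repositoryUrl, userId) =>
    auditLog.push({ event, repositoryUrl, userId, at: now() });

  return {
    auditLog,
    store(repositoryUrl, userId, accessToken) {
      grants.set(keyFor(repositoryUrl, userId), { accessToken, expiresAt: now() + ttlMs });
      record("granted", repositoryUrl, userId);
    },
    get(repositoryUrl, userId) {
      const key = keyFor(repositoryUrl, userId);
      const grant = grants.get(key);
      if (!grant) return null;
      if (grant.expiresAt <= now()) {
        grants.delete(key); // expired: the next call triggers a fresh approval
        record("expired", repositoryUrl, userId);
        return null;
      }
      record("used", repositoryUrl, userId);
      return grant.accessToken;
    },
    revoke(repositoryUrl, userId) {
      const removed = grants.delete(keyFor(repositoryUrl, userId));
      if (removed) record("revoked", repositoryUrl, userId);
      return removed;
    },
  };
}

// Usage with a fake clock so the expiry is easy to follow
let clock = 0;
const store = createGrantStore({ ttlMs: 1000, now: () => clock });
const repo = "https://github.com/mycompany/private-repo";
store.store(repo, "user-1", "token-abc");
console.log(store.get(repo, "user-1")); // token-abc
clock = 1000;
console.log(store.get(repo, "user-1")); // null (TTL elapsed)
console.log(store.auditLog.map((e) => e.event).join(",")); // granted,used,expired
```

Keying grants on both the user and the repository is what makes the permissions fine-grained: approving one repository says nothing about another, and revoking one grant leaves the rest intact.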

The key insight is that the MCP Tool Proxy Pattern transforms the MCP ecosystem from a simple tool-calling framework into a platform for secure, human-supervised AI collaboration while maintaining the LLM’s conversational flow.
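Maintaining the conversational flow also means a human denial should not crash the agent loop. One way to handle it, sketched below under stated assumptions, is to catch the proxy's error and hand the model a short error result in the shape MCP uses for tool results (a `content` array plus an `isError` flag). `runProxiedTool`, `denyingProxy`, and `stubCallTool` are hypothetical names for this example; `callTool` stands in for whatever function actually forwards the request to the MCP server.

```javascript
// Sketch: run a proxied tool call and convert failures (including a human
// denial) into an error result the LLM can read, instead of crashing the loop.
// `proxy` and `callTool` are assumed to be supplied by the application.
async function runProxiedTool(proxy, callTool, llmParams, userContext) {
  try {
    const fullParams = await proxy.injectParameters(llmParams, userContext);
    return await callTool(fullParams);
  } catch (err) {
    // Only a short, non-sensitive message goes back into the model's context
    return {
      isError: true,
      content: [{ type: "text", text: `Tool call not completed: ${err.message}` }],
    };
  }
}

// Usage with stubs: the human declines, so the tool server is never called
const denyingProxy = {
  injectParameters: async () => {
    throw new Error("Repository access denied by user");
  },
};
let toolServerCalls = 0;
const stubCallTool = async () => {
  toolServerCalls += 1;
  return { content: [{ type: "text", text: "ok" }] };
};

runProxiedTool(
  denyingProxy,
  stubCallTool,
  { repository_url: "https://github.com/mycompany/private-repo" },
  { userId: "user-1" }
).then((result) => console.log(result.content[0].text));
// Tool call not completed: Repository access denied by user
```

The model can then explain the denial to the user or try a different approach, and no token or authorization detail ever appears in its context.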

Benefits

I’ve kind of gone over these already, so I’ll focus on the caveats, because every pattern has at least one.

Tool-level code maintenance. So much for the plug-and-play sales pitch, eh? Well, yes, for sure. But this is not meant for the seamless world of agentic IDE integrations. IDEs still see the default set of tools and schemas as the MCP server authors intended. It’s only the direct agent communication via the MCP SDK that changes, you know… ‘production’. I’d say this is where it counts most regardless, and plug-and-play typically flies in the face of security concerns.

Then, of course, there is the extra code itself, but code is expendable these days, so you should no longer be writing it in the first place. Let the agent do the lifting.

You are free to adopt what you see here now; it works fine as is. That said, we are planning an official pull request to have the functionality embedded within the client SDK itself.
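To keep that maintenance burden contained, proxies can be registered per tool, with everything else passing through untouched. The following is a small sketch of the idea, not a prescribed design: `listToolsForLlm` and the `get_weather` tool are hypothetical, and the registry is simply a `Map` from tool name to an object with a `filterSchema` function like the ones shown earlier.

```javascript
// Sketch of a per-tool proxy registry. Tools without a registered proxy pass
// through unchanged, so maintenance is limited to the tools that need it.
function listToolsForLlm(serverTools, proxies) {
  return serverTools.map((tool) => {
    const proxy = proxies.get(tool.name);
    return proxy ? proxy.filterSchema(tool) : tool;
  });
}

// Two example tools as the server might advertise them (hypothetical)
const serverTools = [
  {
    name: "get_weather",
    parameters: { type: "object", properties: { city: { type: "string" } }, required: ["city"] },
  },
  {
    name: "get_order_status",
    parameters: {
      type: "object",
      properties: { order_id: { type: "string" }, api_key: { type: "string" } },
      required: ["order_id", "api_key"],
    },
  },
];

// Only the tool that carries a secret gets a proxy
const proxies = new Map([
  [
    "get_order_status",
    {
      filterSchema: (schema) => {
        const copy = structuredClone(schema);
        delete copy.parameters.properties.api_key;
        copy.parameters.required = copy.parameters.required.filter((n) => n !== "api_key");
        return copy;
      },
    },
  ],
]);

const visible = listToolsForLlm(serverTools, proxies);
console.log(visible.map((t) => Object.keys(t.parameters.properties).join("+")).join(" | "));
// city | order_id
```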
